Think twice before posting that selfie on Facebook; you could be added to a police database.

With all the excitement around AI, thanks to ChatGPT, many are cheering for this technology and loving its optimizing powers. Unfortunately, this onion has many layers; the deeper we go, the stinkier it gets.

We can list dozens of reasons to fear AI, such as it becoming more intelligent than humans and potentially leading to unexpected and uncontrollable outcomes, job displacement, weaponization, and many others. However, there is already one issue that is rearing its ugly head: privacy violations are growing and causing damage.

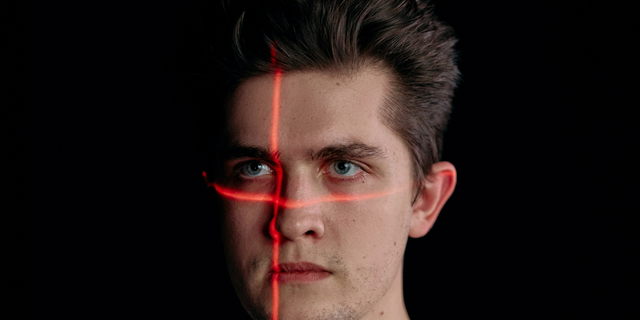

Clearview AI’s software uses artificial intelligence algorithms to analyze images of faces and match them against a database of over 3 billion photos that have been scraped from various sources, including social media platforms like Facebook, Instagram, and Twitter, all without the users’ permission. (Kurt Knutsson)

Unveiling the identity of the most notorious facial recognition company you’ve never heard of

The AI tech company Clearview AI has made headlines for its misuse of consumer data. It provides facial recognition software to law enforcement agencies, private companies and other organizations. Its software uses artificial intelligence algorithms to analyze images of faces and match them against a database of over 3 billion photos that have been scraped from various sources, including social media platforms like Facebook, Instagram and Twitter, all without the users’ permission. The company has already been fined millions of dollars in Europe and Australia for such privacy breaches.

Why law enforcement is Clearview AI’s biggest customer

Critics of the company argue that the use of its software by police puts everyone into a “perpetual police lineup.” Whenever the police have a photo of a suspect, they can compare it to your face, which many people find invasive.

Clearview AI’s system allows law enforcement to upload a photo of a face and find matches in a database of billions of images that the company has collected. It then provides links to where matching images appear online. The system is regarded as one of the most powerful and accurate facial recognition tools in the world.
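
For readers curious about what "finding matches" can look like in practice, here is a minimal sketch of one common approach to face search: each photo is reduced to a list of numbers (an "embedding"), and the uploaded photo is compared against every stored one. This is a generic illustration, not Clearview AI’s actual method, which the company has not published. The three-number embeddings, the `example.com` links, and the 0.9 threshold are all made up for the example.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_matches(probe, database, threshold=0.9):
    """Return (url, score) pairs scoring at or above threshold, best first."""
    scored = [(url, cosine_similarity(probe, vec)) for url, vec in database]
    hits = [(url, score) for url, score in scored if score >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


# Hypothetical toy database: (link where the photo appears, face embedding).
# Real systems use embeddings with hundreds of dimensions, not three.
DATABASE = [
    ("https://example.com/photo-a", [0.90, 0.10, 0.30]),
    ("https://example.com/photo-b", [0.10, 0.80, 0.50]),
    ("https://example.com/photo-c", [0.88, 0.12, 0.33]),
]

probe = [0.90, 0.10, 0.30]  # hypothetical embedding of the uploaded photo
matches = find_matches(probe, DATABASE)

for url, score in matches:
    print(f"{url}  similarity={score:.3f}")
```

Notice that the search returns two links here, not one: a similar-looking face clears the threshold just as an identical one does. Where that threshold is set decides how many look-alikes come back.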

Despite being banned from selling its services to most U.S. companies due to breaking privacy laws, Clearview AI has an exemption for the police. The company’s CEO, Hoan Ton-That, says hundreds of police forces across the U.S. use its software.

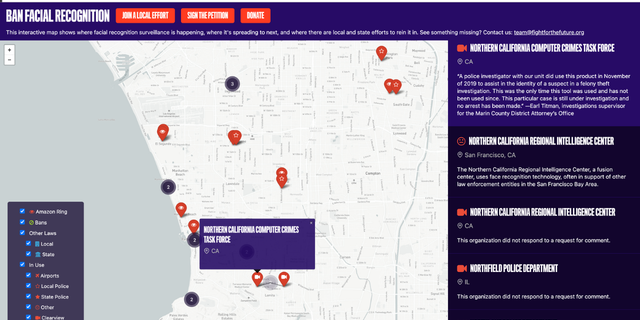

You can visit this website to see if police departments in your area are using this technology. (banfacialrecognition.com)

Generally, however, police only use Clearview AI’s facial recognition technology for serious or violent crimes, with the exception of the Miami Police, who have openly admitted to using the software for every type of crime.

What are the dangers of using this facial ID software?

Clearview AI claims to have a near 100% accuracy rate, yet these figures are often based on mugshots. The system’s accuracy depends on the quality of the images fed into it, which has serious negative ramifications. For instance, the algorithm can confuse two different individuals and provide a positive identification for law enforcement when, in fact, the person in question is entirely innocent and the facial ID was wrong.
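
A little arithmetic shows why "near 100%" can still leave room for wrong identifications when the database is this large. The sketch below assumes a deliberately simplified model in which every stored photo has the same small, independent chance of being wrongly matched to the uploaded one. The error rates are hypothetical placeholders chosen for illustration, not measured figures for Clearview AI or any other product; only the 3 billion database size comes from the article.

```python
def expected_false_matches(gallery_size, false_match_rate):
    """Expected number of wrong candidates returned by one search.

    Simplified model (an assumption): each stored photo is wrongly
    matched independently with probability false_match_rate.
    """
    return gallery_size * false_match_rate


GALLERY_SIZE = 3_000_000_000  # database size reported in the article

# Hypothetical per-comparison error rates, for illustration only.
for rate in (1e-6, 1e-8, 1e-9):
    expected = expected_false_matches(GALLERY_SIZE, rate)
    print(f"false match rate {rate:.0e}: about {expected:,.0f} wrong candidates per search")
```

Under this toy model, even an error rate of one in a billion would be expected to surface a few innocent look-alikes on every search, which is why the quality of the uploaded image and human review of the results matter so much.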

Civil rights campaigners want police forces that use Clearview to openly say when it is used and for its accuracy to be openly tested in court. They want the algorithm scrutinized by independent experts and are skeptical of the company’s claims. This could help ensure that those who are prosecuted because of this technology are, in fact, guilty and not victims of a flawed algorithm.

Final Thoughts

Despite some cases where Clearview AI has been shown to work in a defendant’s favor, such as the case of Andrew Conlyn from Fort Myers, Florida, who had charges against him dropped after Clearview AI was used to find a crucial witness, critics believe the use of the software comes at too high a price for civil liberties and civil rights. While this technology may be used to help some people, its intrusive nature is terrifying.

Would you support this technology if you knew it could help you win a case, or are its ramifications too dangerous? Let us know! We’d love to hear from you.

For more of my tips, subscribe to my free CyberGuy Report Newsletter by clicking the “Free newsletter” link at the top of my website.

Copyright 2023 CyberGuy.com. All rights reserved.
