OpenAI CEO Sam Altman testified before Congress in Washington, D.C., this week about regulating artificial intelligence as well as his personal fears over the tech and what “scary” AI systems mean to him.

Fox News Digital asked OpenAI’s wildly popular chatbot, ChatGPT, to also weigh in on examples of “scary” artificial intelligence systems, and it reported six hypothetical instances of how AI could become weaponized or have potentially harmful impacts on society.

When asked by Fox News Digital on Tuesday after his testimony before a Senate Judiciary subcommittee, Altman gave examples of “scary AI” that included systems that could design “novel biological pathogens.”

“An AI that could hack into computer systems,” he continued. “I think these are all scary. These systems can become quite powerful, which is why I was happy to be here today and why I think this is so important.”

OpenAI CEO Sam Altman speaks with the press after his testimony before a Senate Judiciary subcommittee on May 16, 2023. (Fox News)

Fears have spread among some tech experts and leaders, as well as members of the public, that artificial intelligence could grow so knowledgeable and powerful that it could wipe out society.

The “godfather of artificial intelligence,” computer scientist Geoffrey Hinton, for example, warned last month that “it isn’t inconceivable” AI could wipe “out humanity.” His remarks came after he quit his job at Google, saying he regrets his life’s work because of how AI could be misused.

Advanced autonomous weapon system

When asked for examples of “scary AI,” ChatGPT responded, “These [autonomous weapon systems], often referred to as ‘killer robots’ or ‘lethal autonomous weapons,’ raise ethical concerns and the potential for misuse or unintended consequences.” (Costfoto / Future Publishing via Getty Images)

Following Altman’s comments on scary AI and other tech leaders sounding the alarm on misused AI, Fox News Digital asked ChatGPT on Wednesday to provide an example of “scary AI.”

“An example of ‘scary AI’ is an advanced autonomous weapon system that can independently identify and attack targets without human intervention,” the chatbot responded. “These systems, often referred to as ‘killer robots’ or ‘lethal autonomous weapons,’ raise ethical concerns and the potential for misuse or unintended consequences.”

ChatGPT said to “imagine a scenario where such an AI-powered weapon system is programmed with a set of criteria to identify and eliminate potential threats.” If such a system malfunctioned or wound up in the hands of bad actors, “it could lead to indiscriminate targeting, causing widespread destruction and loss of life.”

The chatbot added this was just one example of “how AI, if not properly controlled or guided by human values, can have potentially alarming implications.”

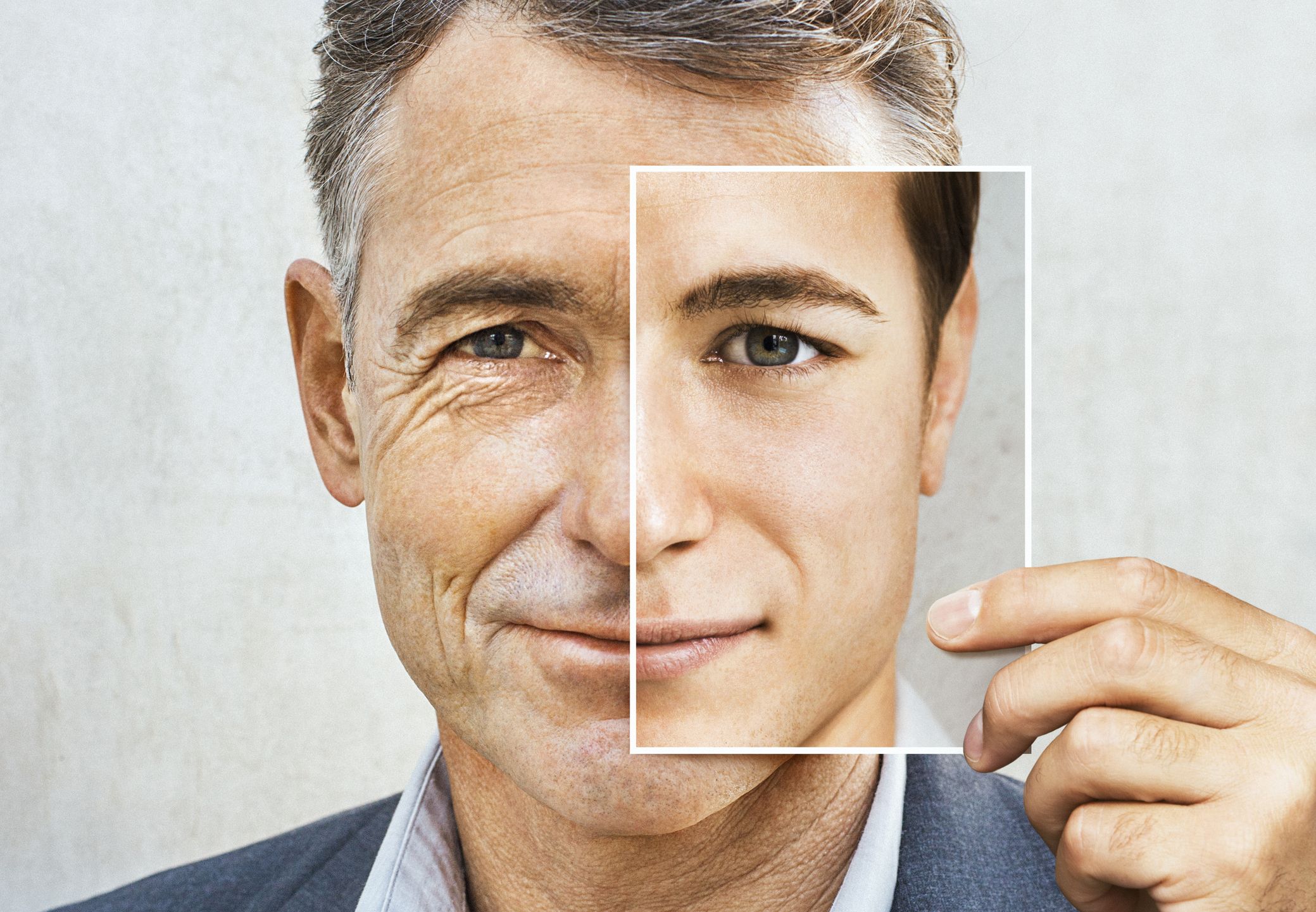

Deepfakes

Fears have spread among some tech experts and leaders, as well as members of the public, that artificial intelligence could grow so knowledgeable and powerful that it could wipe out society. (Reuters / Dado Ruvic / Illustration)

Fox News Digital asked the chatbot to provide additional examples of “scary AI,” and the second example provided was “deepfakes,” which often involve editing videos or photos of people to make them look like someone else by using deep-learning AI.

“This technology can be misused for spreading disinformation, blackmail or manipulating public opinion. Deepfakes have the potential to undermine trust, create social unrest and damage reputations,” ChatGPT stated.

Deepfakes hit the public’s radar in 2017 after a Reddit user posted realistic-looking pornography of celebrities to the platform. Deepfakes have since become more widely used and convincing, and they have led to phony videos, such as comedian Jerry Seinfeld starring in the classic 1994 crime drama “Pulp Fiction” or rapper Snoop Dogg appearing in a fake infomercial promoting tarot card readings.

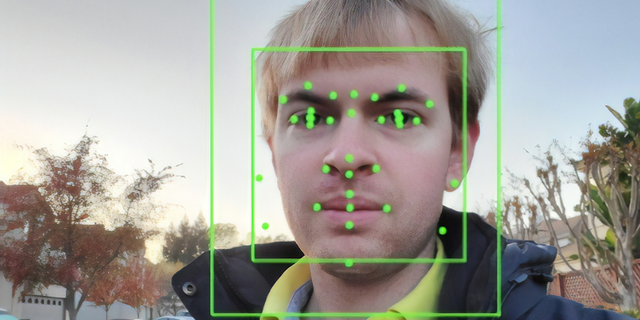

AI-powered surveillance

ChatGPT also listed “AI-powered surveillance” systems used by bad actors, such as “authoritarian regimes,” as an example of “scary AI.” (Smith Collection / Gado / Getty Images / File)

The chatbot next listed “AI-powered surveillance” systems used by bad actors, such as “authoritarian regimes,” as an example of “scary AI.”

“The use of AI in surveillance systems can raise concerns about privacy and civil liberties,” the chatbot said. “Advanced facial recognition algorithms combined with surveillance cameras can enable mass monitoring and identification of individuals, leading to potential abuses by authoritarian regimes or invasive monitoring of citizens.”

In the U.S., some police departments and private businesses have employed AI-powered facial recognition systems in an effort to curb crime. Use of the tech has come with some notable failures, including a handful of lawsuits from people who said they were falsely arrested when the systems misidentified a suspect in a crime case.

Social engineering bots

Artificial intelligence is facing a debate over regulation. (iStock)

ChatGPT said chatbots themselves pose a risk to society and individuals, arguing that “chatbots and social engineering algorithms” can be trained to “manipulate human behavior and exploit vulnerabilities.”

“These bots can be used for phishing attacks, spreading propaganda or influencing public opinion through social media platforms, amplifying the potential for misinformation and societal division,” the chatbot said.

When asked to elaborate on the threats chatbots could pose, ChatGPT said that such technology has the ability to mimic human conversations and “convincingly interact with individuals.” Chatbots could then “exploit psychological vulnerabilities” of humans by analyzing their responses, potentially leading to humans divulging sensitive personal information or buying into false information from the bots, or the systems could manipulate human “emotions for malicious purposes.”

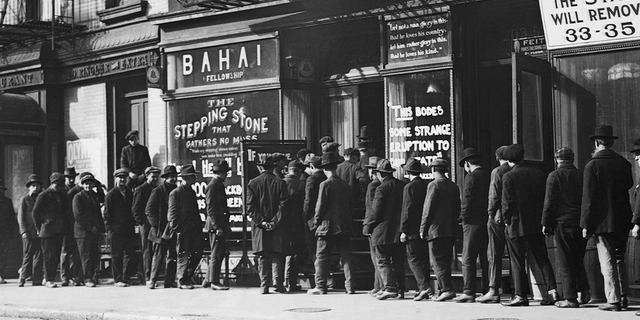

Unemployment and economic disparity

Unemployed men line up for coffee and bread at a soup kitchen in New York City circa 1930. (FPG / Hulton Archive / Getty Images)

ChatGPT argued that as artificial intelligence systems become more powerful, they could take over jobs typically occupied by humans, which could widen “economic inequality.”

“There are concerns about job displacement and widening economic inequality,” ChatGPT said. “AI-driven systems and robots replacing human workers in various industries could lead to significant job losses and social disruptions if not properly managed or accompanied by measures to retrain and reskill the workforce.”

A report from Goldman Sachs earlier this year found that generative AI could replace and affect 300 million jobs around the world. Another study from outplacement and executive coaching firm Challenger, Gray & Christmas found that ChatGPT could replace at least 4.8 million American jobs.

The reports have caused some anxiety among workers, including in fields most likely affected by the technology, such as customer service representatives, but proponents of the technology say the proliferation of AI could lead to the development of new jobs and a strengthened economy.

AI bias and discrimination

ChatGPT said AI systems can perpetuate bias and discrimination based on how they are trained, which could amplify “existing societal inequalities.”

“AI systems can inadvertently perpetuate biases present in the data they’re trained on, leading to discriminatory outcomes,” ChatGPT said. “For example, biased algorithms in hiring processes or predictive policing systems could perpetuate systemic biases and unfair treatment of certain groups, amplifying existing societal inequalities.”

ChatGPT can sometimes “hallucinate,” meaning it can respond to a query with an answer that sounds correct but is actually made up. Concerns over hallucinations and political bias in AI systems have intensified in recent months, with tech giant Elon Musk telling Fox News last month that Silicon Valley programmers “are training the AI to lie.”

ChatGPT can sometimes “hallucinate,” meaning it can respond to a query with an answer that sounds correct but is actually made up. (Getty Images / File)

ChatGPT highlighted in its responses about “scary AI” that though its examples shine a light on the “potential negative implications of AI, they also reflect the importance of responsible development, regulation and ethical considerations to mitigate such risks and ensure the beneficial and safe use of AI technologies.”

Altman said during the Senate hearing Tuesday that his greatest fear as OpenAI develops artificial intelligence is that it causes major harmful disruptions for people.

OpenAI CEO Sam Altman takes his seat before the start of the Senate Judiciary subcommittee hearing on AI, Tuesday, May 16, 2023. (Bill Clark / CQ-Roll Call Inc. via Getty Images)

“My worst fears are that we cause significant – we, the field, the technology industry – cause significant harm to the world,” Altman said. “I think that could happen in a lot of different ways. It’s why we started the company.”

“I think if this technology goes wrong, it can go quite wrong, and we want to be vocal about that,” he added. “We want to work with the government to prevent that from happening.”

Altman said during the hearing that he invites the opportunity to work with U.S. lawmakers on crafting regulations for AI that would help prevent unwanted outcomes with the tech.
