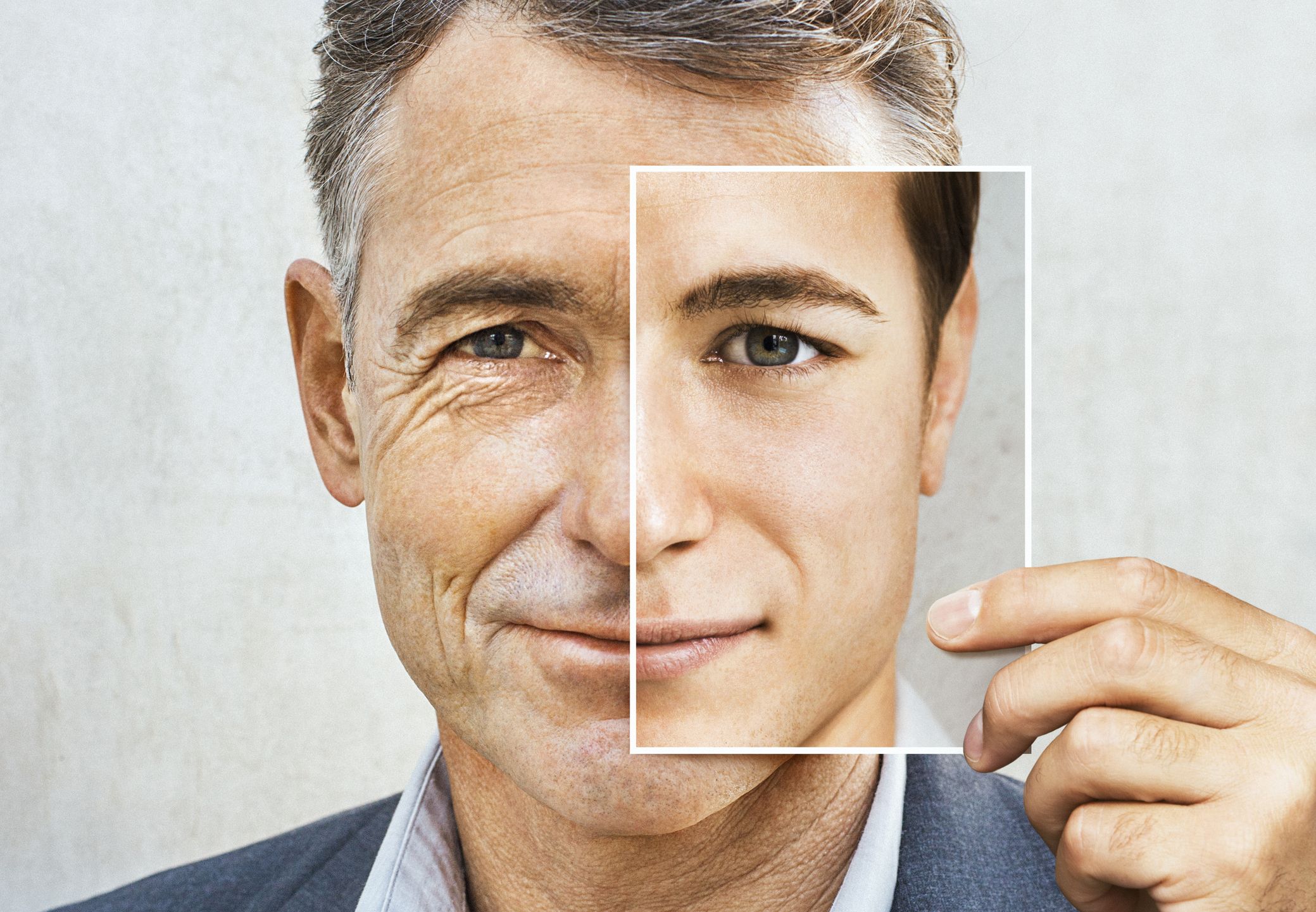

Images generated by artificial intelligence are becoming more convincing and prevalent, and they could lead to more complicated court cases if the synthetic media is submitted as evidence, legal experts say.

“Deepfakes” often involve editing videos or photos of people to make them look like someone else by using deep-learning AI. The technology broadly hit the public’s radar in 2017 after a Reddit user posted realistic-looking pornography of celebrities to the platform.

The pornography was revealed to be doctored, but the revolutionary tech has only become more realistic and easier to make in the years since.

For legal experts, deepfakes and AI-generated images and videos will likely cause huge headaches in the courts, which have been bracing for this technology for years. The ABA Journal, the flagship publication of the American Bar Association, published a post in 2020 that warned how courts across the world were struggling with the proliferation of deepfake images and videos submitted as evidence.

“If a picture’s worth a thousand words, a video or audio could be worth a million,” Jason Lichter, a member of the ABA’s E-Discovery and Digital Evidence Committee, told the ABA Journal at the time. “Because of how much weight is given to a video by a factfinder, the risks associated with deepfakes are that much more profound.”

Deepfakes submitted as evidence in court cases have already cropped up. In the U.K., a woman who accused her husband of being violent during a custody battle was found to have doctored audio she submitted as evidence of his violence.

Two men charged with participating in the Jan. 6, 2021, Capitol riot claimed that video of the incident may have been created by AI. And lawyers for Elon Musk’s Tesla recently argued that a video of Musk from 2016, which appeared to show him touting the self-driving features of the cars, could be a deepfake after the family of a man who died in a Tesla sued the company.

A professor at Newcastle University in the U.K. argued in an essay last year that deepfakes could become a problem for low-level offenses working their way through the courts.

“Although political deepfakes grab the headlines, deepfaked evidence in quite low-level legal cases – such as parking appeals, insurance claims or family tussles – could become a problem very quickly,” law professor Lillian Edwards wrote in December. “We are now at the beginning of living in that future.”

Edwards pointed to how deepfakes used in criminal cases will likely also become more prevalent, citing a BBC report on how cybercriminals used deepfake audio to swindle three unsuspecting business executives into transferring millions of dollars in 2019.

In the U.S. this year, there have been a handful of warnings from local police departments about criminals using AI to try to get ransom money out of families. In Arizona, a mother said last month that criminals used AI to mimic her daughter’s voice to demand $1 million.

The ABA Journal reported in 2020 that as the technology becomes more powerful and convincing, forensic experts will be saddled with the difficult task of deciphering what’s real. This can entail analyzing photos or videos for inconsistencies, such as the presence of shadows, or authenticating images directly from a camera’s source.

With the rise of the new technology also comes the worry that people will more readily claim any evidence was AI-generated.

“That’s exactly what we were concerned about: That when we entered this age of deepfakes, anybody can deny reality,” Hany Farid, a digital forensics expert and professor at the University of California, Berkeley, told NPR this week.

Earlier this year, thousands of tech experts and leaders signed an open letter that called for a pause on research at AI labs so policymakers and industry leaders could craft safety precautions for the technology. The letter argued that powerful AI systems could pose a threat to humanity, and it also noted that the systems threaten to spread “propaganda.”

“Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth?” states the letter, which was signed by tech leaders such as Musk and Apple co-founder Steve Wozniak.
