As the artificial intelligence train barrels on with no signs of slowing down (some studies have even predicted that AI will grow by more than 37% per year between now and 2030), the World Health Organization (WHO) has issued an advisory calling for “safe and ethical AI for health.”

The agency recommended caution when using “AI-generated large language model tools (LLMs) to protect and promote human well-being, human safety and autonomy, and preserve public health.”

ChatGPT, Bard and Bert are currently some of the most popular LLMs.

In some cases, the chatbots have been shown to rival real physicians in terms of the quality of their responses to medical questions.

While the WHO acknowledges that there is “significant excitement” about the potential to use these chatbots for health-related needs, the organization underscores the need to weigh the risks carefully.

“This includes widespread adherence to key values of transparency, inclusion, public engagement, expert supervision and rigorous evaluation.”

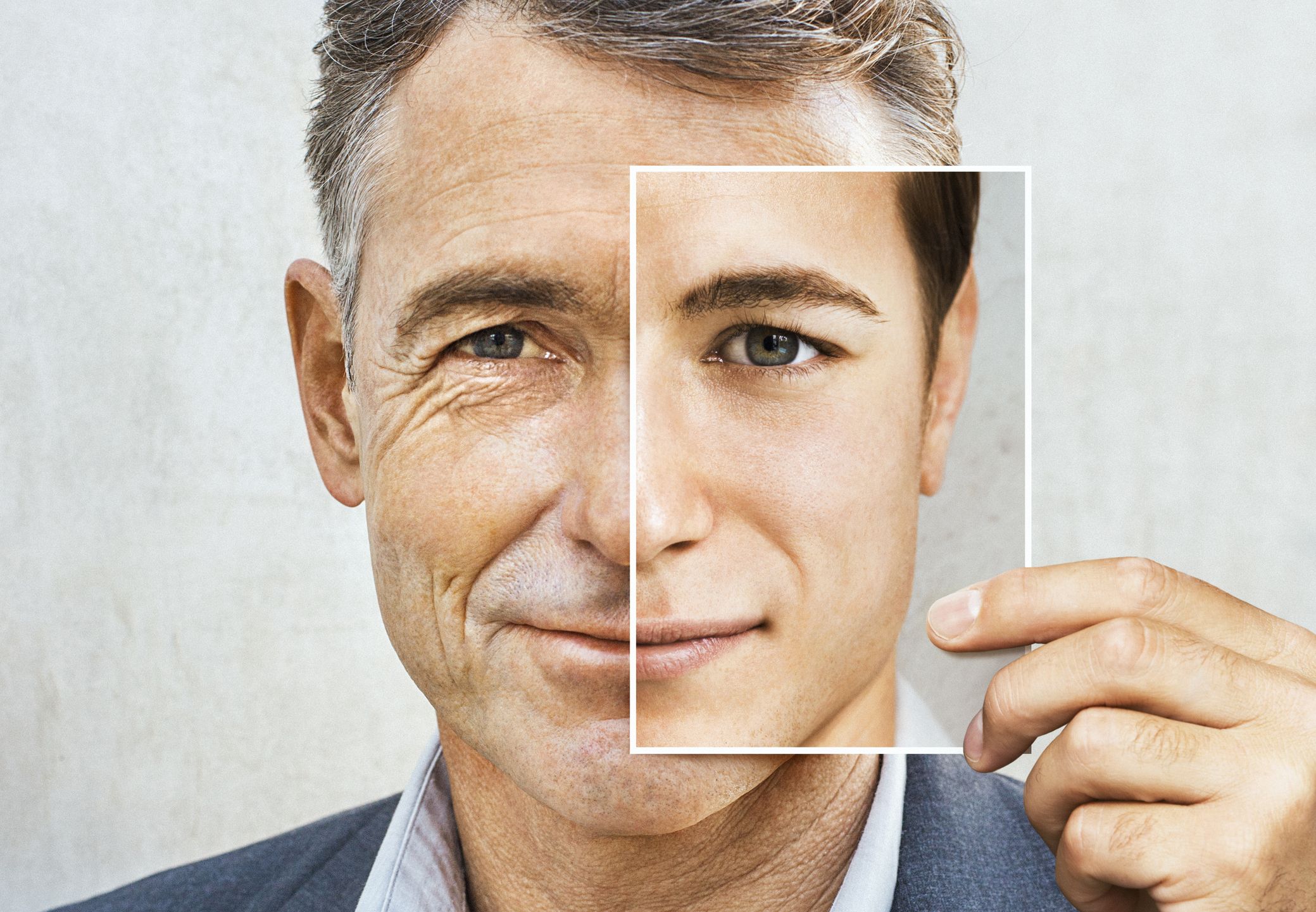

The World Health Organization (WHO) has issued an advisory calling for “safe and ethical AI for health.” (iStock)

The agency warned that adopting AI systems too quickly without thorough testing could result in “errors by health care workers” and could “cause harm to patients.”

WHO outlines specific concerns

In its advisory, WHO warned that LLMs like ChatGPT could be trained on biased data, potentially “generating misleading or inaccurate information that could pose risks to health equity and inclusiveness.”

There is also the risk that these AI models could generate incorrect responses to health questions while still coming across as confident and authoritative, the agency said.

“LLMs can be misused to generate and disseminate highly convincing disinformation in the form of text, audio or video content that is difficult for the public to differentiate from reliable health content,” WHO stated.

Another concern is that LLMs might be trained on data without the consent of those who originally provided it, and that they may not have the proper protections in place for the sensitive data that patients enter when seeking advice.

“While committed to harnessing new technologies, including AI and digital health, to improve human health, WHO recommends that policy-makers ensure patient safety and protection while technology firms work to commercialize LLMs,” the organization said.

AI expert weighs risks, benefits

Manny Krakaris, CEO of the San Francisco-based health technology company Augmedix, said he supports the WHO’s advisory.

“This is a quickly evolving topic and using caution is paramount to patient safety and privacy,” he told Fox News Digital in an email.

Augmedix leverages LLMs, along with other technologies, to produce medical documentation and data solutions.

“When used with appropriate guardrails and human oversight for quality assurance, LLMs can bring a lot of efficiency,” Krakaris said. “For example, they can be used to provide summarizations and streamline large amounts of data quickly.”

He did highlight some potential risks, however.

“While LLMs can be used as a supportive tool, doctors and patients cannot rely on LLMs as a standalone solution,” Krakaris said.

“LLMs generate data that appear accurate and definitive but may be completely erroneous, as WHO noted in its advisory,” he continued. “This can have catastrophic consequences, especially in health care.”

When creating its ambient medical documentation services, Augmedix combines LLMs with automatic speech recognition (ASR), natural language processing (NLP) and structured data models to help ensure the output is accurate and relevant, Krakaris said.

AI has ‘promise’ but requires caution and testing

Krakaris said he sees a lot of promise for the use of AI in health care, as long as these technologies are used with caution, properly tested and guided by human involvement.

“AI will never fully replace people, but when used with the proper parameters to ensure that quality of care is not compromised, it can create efficiencies, ultimately supporting some of the biggest issues that plague the health care industry today, including clinician shortages and burnout,” he said.
