After a two-year decline, U.S. suicide rates spiked again in 2021, according to a new report from the Centers for Disease Control and Prevention (CDC).

Suicide is now the 11th leading cause of death in the country, the second among people between 10 and 35 years of age and the fifth among those aged 35 to 54, per the report.

As the need for mental health care escalates, the U.S. is struggling with a shortage of providers. To help fill this gap, some medical technology companies have turned to artificial intelligence as a way to potentially make providers’ jobs easier and patient care more accessible.

But there are caveats. Read on.

The state of mental health care

More than 160 million people currently live in “mental health professional shortage areas,” according to the Health Resources and Services Administration (HRSA), an agency of the U.S. Department of Health and Human Services.

By 2024, the total number of psychiatrists is expected to reach a new low, with a projected shortage of between 14,280 and 31,091 psychiatrists.

“Lack of funding from the government, a shortage of providers and ongoing stigma regarding mental health treatment are some of the biggest barriers,” Dr. Meghan Marcum, chief psychologist at AMFM Healthcare in Orange County, California, told Fox News Digital.

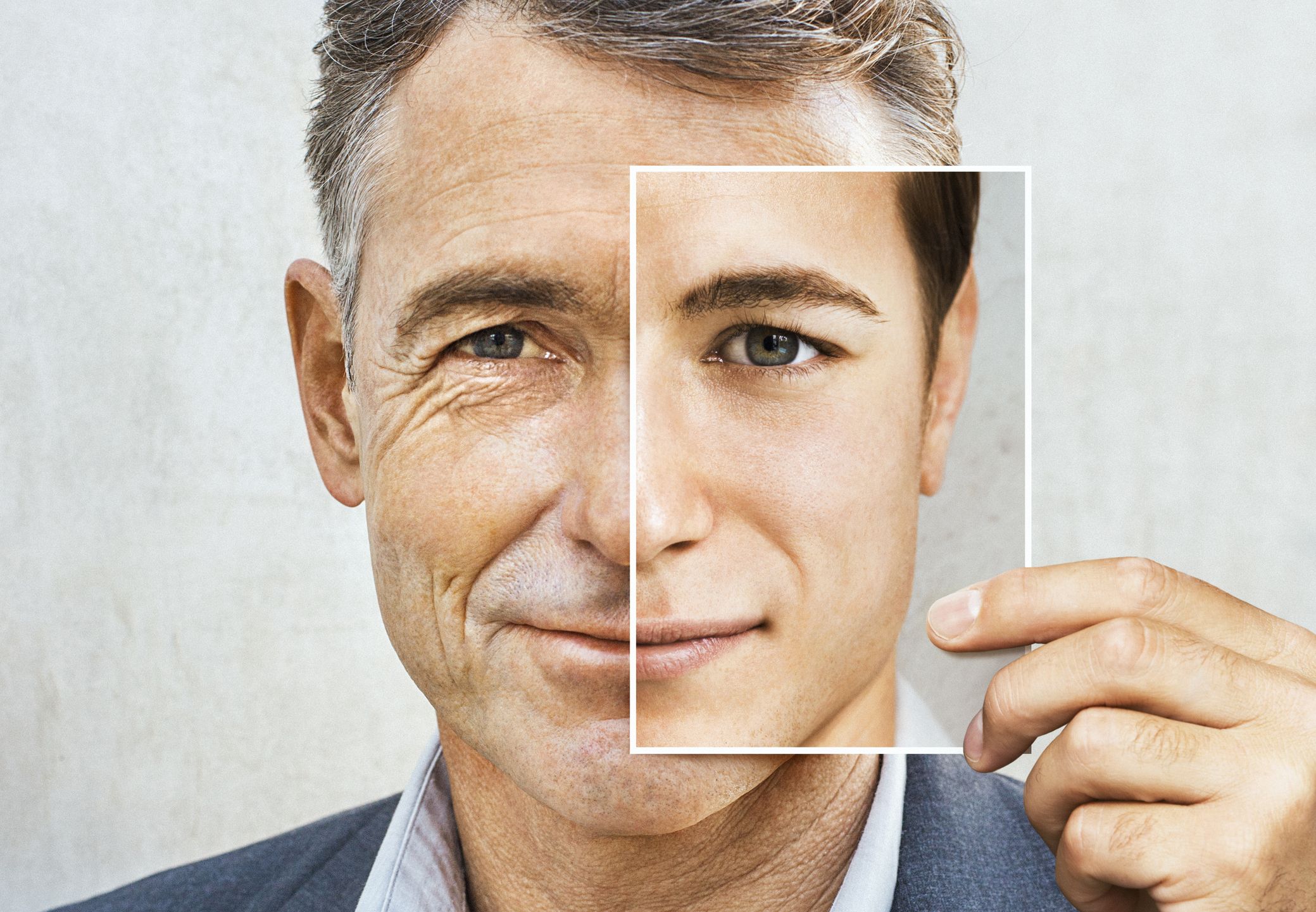

Some medical tech companies have turned to artificial intelligence as a way to ease providers’ workloads and make patient care more accessible. (iStock)

“Wait lists for therapy can be long, and some individuals need specialized services like addiction or eating disorder treatment, making it hard to know where to start when it comes to finding the right provider,” Marcum also said.

Elevating mental health care with AI

A Boston, Massachusetts, medical data company called OM1 recently built an AI-based platform for physicians called PHenOM.

The tool pulls data from more than 9,000 clinicians working in 2,500 locations across all 50 states, according to Dr. Carl Marci, chief psychiatrist and managing director of mental health and neuroscience at OM1.

Physicians can use that data to track trends in depression, anxiety, suicidal tendencies and other mental health disorders, the doctor said.

“Part of the reason we’re having this mental health crisis is that we haven’t been able to bring new tools, technologies and treatments to the bedside as quickly as we’d like,” said Dr. Marci, who has also run a small clinical practice through Mass General Brigham in Boston for 20 years.

Ultimately, artificial intelligence could help patients get the care they need faster and more efficiently, he said.

Can AI help reduce suicide risk?

OM1’s AI model analyzes thousands of patient records and uses “sophisticated clinical language models” to identify which individuals have expressed suicidal tendencies or actually attempted suicide, Dr. Marci said.

“We can look at all of our data and begin to build models to predict who is at risk for suicidal ideation,” he said. “One approach would be to look for particular outcomes, in this case suicide, and see if we can use AI to do a better job of identifying patients at risk and then directing care to them.”

In the traditional mental health care model, a patient sees a psychiatrist for depression, anxiety, PTSD, insomnia or another disorder.

The doctor then makes a treatment recommendation based solely on his or her own experience and what the patient says, Dr. Marci said.

“Soon, I’m going to be able to put some information from the chart into a dashboard, which will then generate three ideas that are more likely to succeed for depression, anxiety or insomnia than my best guess,” he told Fox News Digital.

“The computer will be able to compare those parameters that I put into the system for the patient … against 100,000 similar patients.”

Within seconds, the doctor would be able to access information to use as a decision-making tool to improve patient outcomes, he said.

‘Filling the gaps’ in mental health care

When patients are in the mental health system for many months or years, it’s important for doctors to be able to track how their disease is progressing, something the real world doesn’t always capture, Dr. Marci noted.

Doctors need to be able to track how patients’ disease is progressing, something the real world doesn’t always capture, said Dr. Marci of Boston. (iStock)

“The ability to use computers, AI and data science to do a clinical assessment of the chart without the patient answering any questions or the clinician being burdened fills in a lot of gaps,” he told Fox News Digital.

“We can then begin to apply other models to see who’s responding to treatment, what types of treatment they’re responding to and whether they’re getting the care they need,” he added.

Benefits and risks of ChatGPT in mental health care

Given growing mental health challenges and the widespread shortage of mental health providers, Dr. Marci said he believes doctors will begin using ChatGPT, the AI-based large language model that OpenAI released in 2022, as a “large language model therapist,” allowing doctors to interact with patients in a “clinically meaningful way.”

Models such as ChatGPT could potentially serve as an “off-hours” resource for those who need help in the middle of the night or on a weekend, when they can’t get to the doctor’s office, “because mental health doesn’t take a break,” Dr. Marci said.

“The opportunity to have continuous care where the patient lives, rather than having to come into an office or get on a Zoom, that’s supported by sophisticated models that actually have proven therapeutic value … [is] important,” he also said.

But these models, which are built on both good information and misinformation, are not without risks, the doctor admitted.

With growing mental health challenges in the country and a widespread shortage of mental health providers, some people believe doctors will start using ChatGPT to interact with patients to “fill gaps.” (iStock)

“The most obvious risk is for [these models] to give actually deadly advice … and that would be disastrous,” he said.

To minimize these risks, the models would need to filter out misinformation or add some checks on the data to remove any potentially harmful advice, said Dr. Marci.

Other providers see potential but urge caution

Dr. Cameron Caswell, an adolescent psychiatrist in Washington, D.C., has seen firsthand the struggle providers face in keeping up with the growing need for mental health care.

“I’ve talked to people who have been wait-listed for months, can’t find anyone who accepts their insurance or aren’t able to connect with a professional who meets their specific needs,” she told Fox News Digital.

“They want help, but can’t seem to get it. This only adds to their feelings of hopelessness and despair.”

Even so, Dr. Caswell is skeptical that AI is the answer.

“Programs like ChatGPT are phenomenal at providing information, research, strategies and tools, which can be helpful in a pinch,” she said.

“However, technology doesn’t provide what people need the most: empathy and human connection.”

Physicians can use data from AI to track trends in depression, anxiety and other mental health disorders, said Dr. Carl Marci of medical tech company OM1. But another expert said, “Technology doesn’t provide what people need the most: empathy and human connection.” (iStock)

“While AI can provide positive reminders and prompt calming techniques, I worry that if it’s used to self-diagnose, it will lead to misdiagnosing, mislabeling and mistreating behaviors,” she continued.

“This is likely to exacerbate problems, not remediate them.”

Dr. Marcum of Orange County, California, said she sees AI as a helpful tool between sessions, or as a way to offer education about a diagnosis.

“It can also help clinicians with documentation or report writing, which can potentially free up time to serve more clients throughout the week,” she told Fox News Digital.

There are ongoing ethical concerns, however, including privacy, data security and accountability, which still need to be addressed further, she said.

“I think we will definitely see a trend toward the use of AI in treating mental health,” said Dr. Marcum.

“But the exact landscape for how it will shape the field has yet to be determined.”
