Along with writing articles, songs and code in mere seconds, ChatGPT could potentially make its way into your doctor's office, if it hasn't already.

The artificial intelligence-based chatbot, released by OpenAI in November 2022, is a natural language processing (NLP) model that was trained on large amounts of text from the internet and provides answers in a clear, conversational format.

While it's not intended to be a source of personalized medical advice, patients are able to use ChatGPT to get information on diseases, medications and other health topics.

Some experts even believe the technology could help physicians provide more efficient and thorough patient care.

Dr. Tinglong Dai, professor of operations management at the Johns Hopkins Carey Business School in Baltimore, Maryland, and an expert in artificial intelligence, said that large language models (LLMs) like ChatGPT have "upped the game" in medical AI.

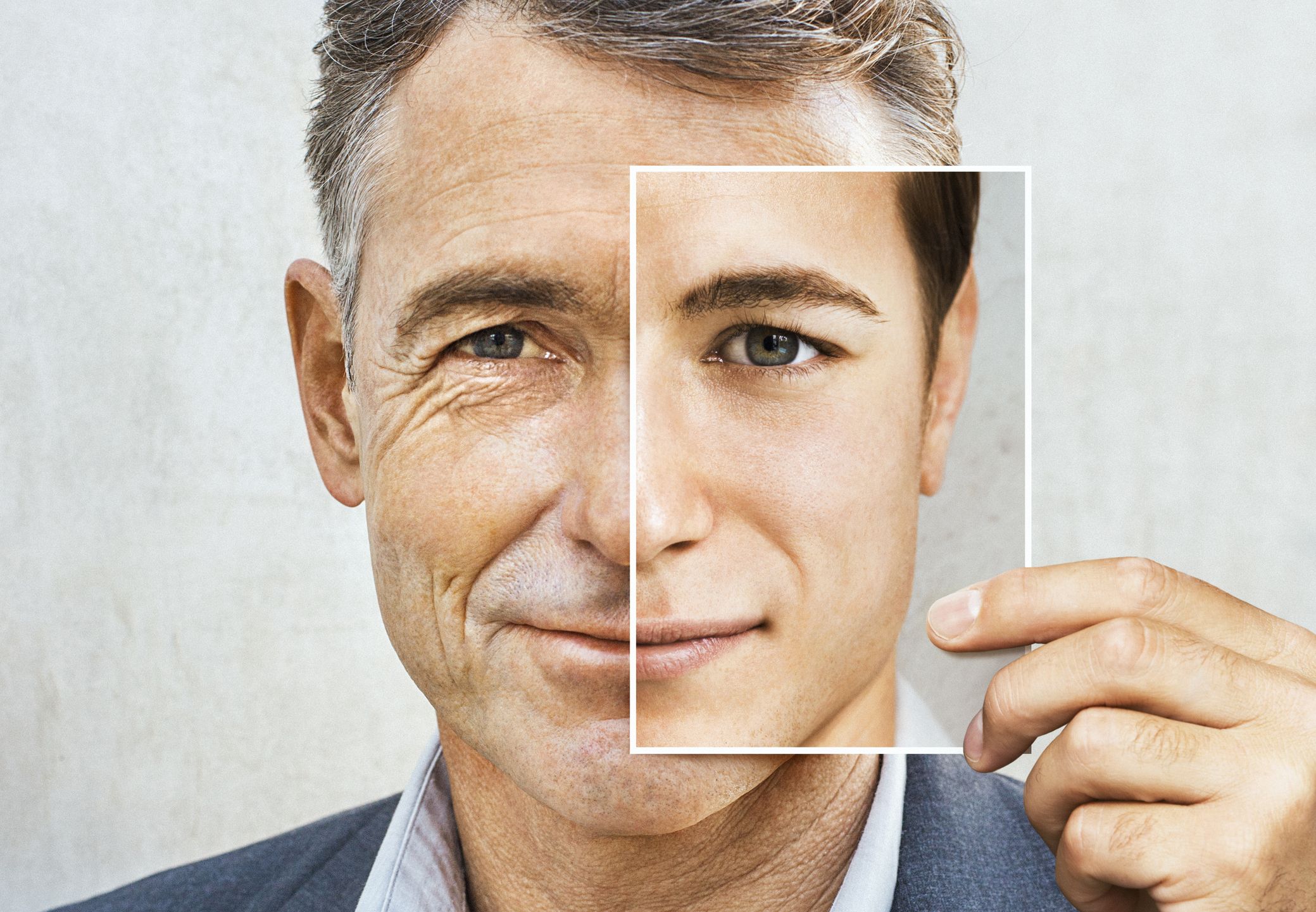

Some experts believe that the ChatGPT artificial intelligence chatbot could help physicians provide more efficient and thorough patient care. (iStock)

"The AI we see in the hospital today is purpose-built and trained on data from specific disease states; it often can't adapt to new scenarios and new situations, and can't use medical knowledge bases or perform basic reasoning tasks," he told Fox News Digital in an email.

"LLMs give us hope that general AI is possible in the world of health care."

Clinical decision support

One potential use for ChatGPT is to provide clinical decision support to doctors and medical professionals, assisting them in selecting the appropriate treatment options for patients.

In a preliminary study from Vanderbilt University Medical Center, researchers analyzed the quality of 36 AI-generated suggestions and 29 human-generated suggestions for clinical decision support.

Of the 20 highest-scoring responses, nine came from ChatGPT.

"The suggestions generated by AI were found to offer unique perspectives and were evaluated as highly understandable and relevant, with moderate usefulness, low acceptance, bias, inversion and redundancy," the researchers wrote in the study findings, which were published in the National Library of Medicine.

Dai noted that doctors can input medical records from a variety of sources and formats (including images, videos, audio recordings, emails and PDFs) into large language models like ChatGPT to get second opinions.

"It also means that providers can build more efficient and effective patient messaging portals that understand what patients need and direct them to the most appropriate parties or respond to them with automated responses," he added.

Dr. Justin Norden, a digital health and AI expert who is an adjunct professor at Stanford University in California, said he has heard senior physicians say that ChatGPT may be "as good or better" than most interns during their first year out of medical school.

"We're seeing medical plans generated in seconds," he told Fox News Digital in an interview.

"These tools can be used to draw relevant information for a provider, to act as a sort of 'co-pilot' to help someone think through other things they might consider."

Health education

Norden is especially excited about ChatGPT's potential use for health education in a clinical setting.

"I think one of the amazing things about these tools is that you can take a body of information and transform what it looks like for many different audiences, languages and reading comprehension levels," he said.

ChatGPT could, for instance, enable physicians to fully explain complex medical concepts and treatments to each patient in a way that's digestible and easy to understand, said Norden.

"For example, after having a procedure, the patient could chat with that body of knowledge and ask follow-up questions," Norden said.

Administrative tasks

The lowest-hanging fruit for using ChatGPT in health care, said Norden, is streamlining administrative tasks, which are a "huge time component" for medical providers.

In particular, he said some providers are looking to the chatbot to streamline medical notes and documentation.

"On the clinical side, people are already starting to experiment with GPT models to help with writing notes, drafting patient summaries, evaluating patient severity scores and finding clinical information quickly," he said.

"Additionally, on the administrative side, it's being used for prior authorization, billing and coding, and analytics," Norden added.

Two medical tech companies that have made significant headway into these applications are Doximity and Nuance, Norden pointed out.

Doximity, a professional medical network for physicians headquartered in San Francisco, launched its DocsGPT platform to help doctors write letters of medical necessity, denial appeals and other medical documents.

Nuance, a Microsoft company based in Massachusetts that creates AI-powered health care solutions, is piloting its GPT-4-enabled note-taking program.

The plan is to start with a smaller subset of beta users and gradually roll out the system to its 500,000-plus users, said Norden.

While he believes these types of tools still need regulatory "guard rails," he sees huge potential for this kind of use, both inside and outside health care.

"If I have a huge database or pile of documents, I can ask a natural question and start to pull out relevant pieces of information; large language models have shown they're very good at that," he said.

Patient discharges

The hospital discharge process involves many steps, including assessing the patient's medical condition, determining follow-up care, prescribing and explaining medications, providing lifestyle restrictions and more, according to Johns Hopkins.

AI language models like ChatGPT could potentially help streamline patient discharge instructions, Norden believes.

"This is incredibly important, especially for someone who has been in the hospital for a while," he told Fox News Digital.

Patients "might have a lot of new medications, things they have to do and follow up on, and they're often left with [a] few pieces of printed paper and that's it."

He added, "Giving someone far more information in a language that they understand, in a format they can continue to interact with, I think is really powerful."

Privacy and accuracy cited as big risks

While ChatGPT could potentially streamline routine health care tasks and increase providers' access to vast amounts of medical data, it is not without risks, according to experts.

Dr. Tim O'Connell, the vice chair of medical informatics in the department of radiology at the University of British Columbia, said there is a serious privacy risk when users copy and paste patients' clinical notes into a cloud-based service like ChatGPT.

"Unlike ChatGPT, most clinical NLP solutions are deployed into a secure installation so that sensitive data isn't shared with anyone outside the organization," he told Fox News Digital.

"Both Canada and Italy have announced that they are investigating OpenAI [ChatGPT's parent company] to see if they are collecting or using personal information inappropriately."

Additionally, O'Connell said the risk of ChatGPT generating false information could be dangerous.

Health care providers generally categorize errors as "acceptably wrong" or "unacceptably wrong," he said.

"An example of 'acceptably wrong' would be for a system not to recognize a word because a care provider used an ambiguous acronym," he explained.

"An 'unacceptably wrong' situation would be one where a system makes a mistake that any human, even one who is not a trained professional, would not make."

This might mean making up diseases the patient never had, or having a chatbot become aggressive with a patient or give them bad advice that could harm them, said O'Connell, who is also CEO of Emtelligent, a Vancouver, British Columbia-based medical technology company that has created an NLP engine for medical text.

"At the moment, ChatGPT has a very high risk of being 'unacceptably wrong' far too often," he added. "The fact that ChatGPT can invent facts that look plausible has been noted by many as one of the biggest problems with the use of this technology in health care."

"We want medical AI software to be trustworthy, to provide answers that are explainable or can be verified as true by the user, and to produce output that is faithful to the facts without any bias," he continued.

"At the moment, ChatGPT does not yet do well on these measures, and it's hard to see how a language generation engine can provide such guarantees."