Artificial intelligence is opening the door to a disturbing trend of people creating realistic images of children in sexual settings, which could increase the number of cases of sex crimes against children in real life, experts warn.

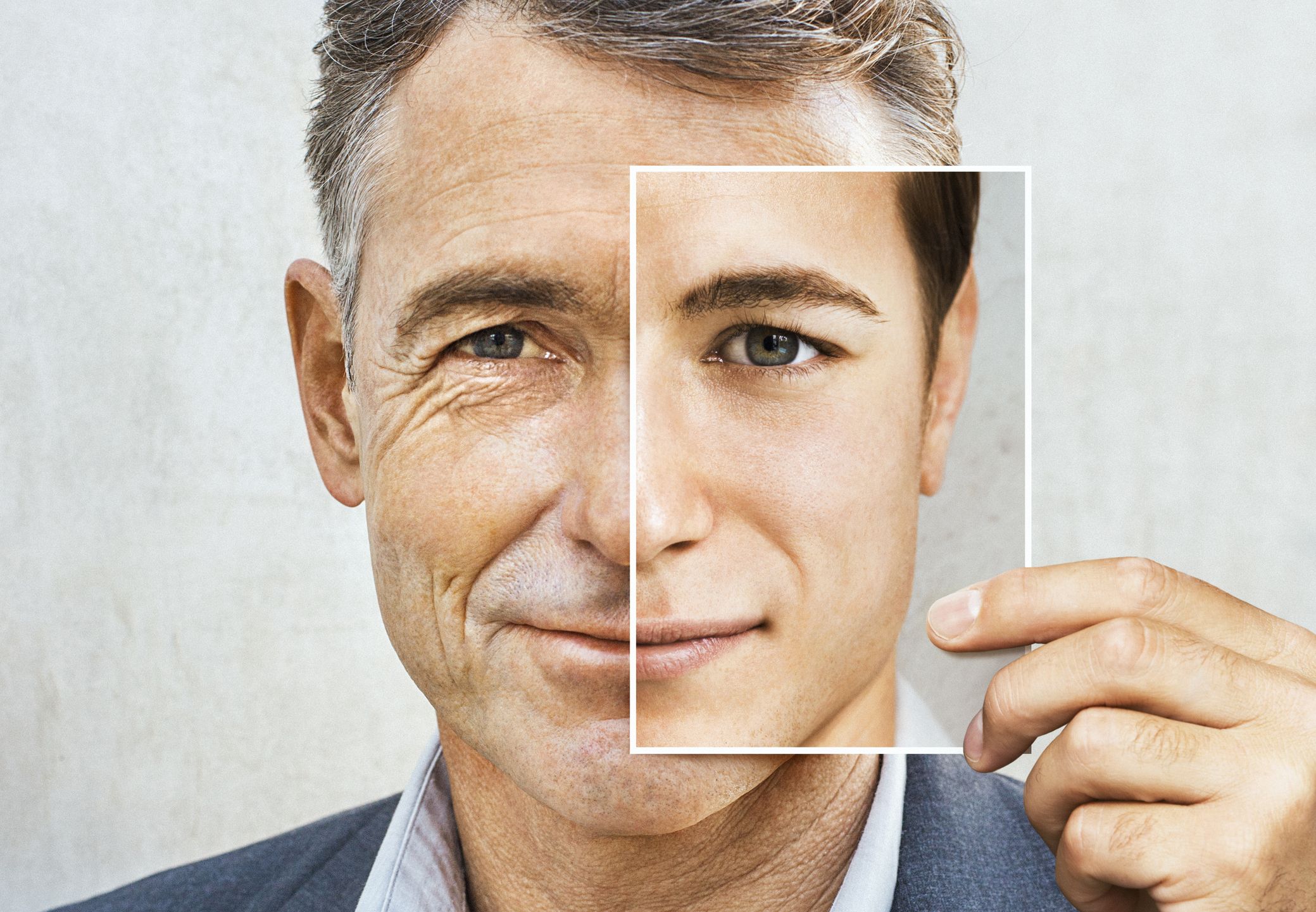

AI platforms that can mimic human conversation or create realistic images exploded in popularity late last year into 2023 following the release of chatbot ChatGPT, which served as a watershed moment for the use of artificial intelligence. As the curiosity of people across the world was piqued by the technology for work or school tasks, others have embraced the platforms for more nefarious purposes.

The National Crime Agency, which is the UK’s lead agency combating organized crime, warned this week that the proliferation of machine-generated explicit images of children is having a “radicalizing” effect, “normalizing” pedophilia and disturbing behavior against children.

“We assess that the viewing of these images – whether real or AI-generated – materially increases the risk of offenders moving on to sexually abusing children themselves,” the NCA’s director general, Graeme Biggar, said in a recent report.

Graeme Biggar, Director General of the National Crime Agency (NCA), during a Northern Ireland Policing Board meeting at James House, Belfast. Picture date: Thursday June 1, 2023. (Photo by Liam McBurney/PA Images via Getty Images)

The agency estimates there are up to 830,000 adults, or 1.6% of the adult population in the UK, who pose some type of sexual danger to children. The estimated figure is ten times greater than the UK’s prison population, according to Biggar.

The majority of child sexual abuse cases involve viewing explicit images, according to Biggar, and with the help of AI, creating and viewing sexual images could “normalize” abusing children in the real world.

“[The estimated figures] partly reflect a better understanding of a threat that has historically been underestimated, and partly a real increase caused by the radicalising effect of the internet, where the widespread availability of videos and images of children being abused and raped, and groups sharing and discussing the images, has normalised such behaviour,” Biggar said.

Artificial intelligence illustrations are seen on a laptop with books in the background in this illustration photo on 18 July, 2023. (Photo by Jaap Arriens/NurPhoto via Getty Images)

Stateside, a similar explosion of using AI to create sexual images of children is unfolding.

“Children’s images, including the content of known victims, are being repurposed for this really evil output,” Rebecca Portnoff, the director of data science at Thorn, a nonprofit that works to protect kids, told the Washington Post last month.

“Victim identification is already a needle-in-a-haystack problem, where law enforcement is trying to find a child in harm’s way,” she said. “The ease of using these tools is a significant shift, as well as the realism. It just makes everything more of a challenge.”

Popular AI sites that can create images based on simple prompts often have community guidelines preventing the creation of disturbing photos.

Teenaged girl in dark room. (Getty Images)

Such platforms are trained on millions of images from across the internet that serve as building blocks for AI to create convincing depictions of people or locations that do not actually exist.

Midjourney, for example, calls for PG-13 content that avoids “nudity, sexual organs, fixation on naked breasts, people in showers or on toilets, sexual imagery, fetishes.” DALL-E, OpenAI’s image creator platform, only allows G-rated content, prohibiting images that show “nudity, sexual acts, sexual services, or content otherwise meant to arouse sexual excitement.” Dark web forums of people with ill intentions discuss workarounds to create disturbing images, however, according to various reports on AI and sex crimes.

Police car with 911 sign. (Getty Images)

Biggar noted that AI-generated images of children also throw police and law enforcement into a maze of deciphering fake images from those of real victims who need assistance.

“The use of AI for child sexual abuse will make it harder for us to identify real children who need protecting, and further normalise abuse,” the NCA director general said.

AI-generated images can also be used in sextortion scams, with the FBI issuing a warning on the crimes last month.

Deepfakes often involve editing videos or photos of people to make them look like someone else by using deep-learning AI, and have been used to harass victims, including children, or to collect money from them.

“Malicious actors use content manipulation technologies and services to exploit photos and videos – typically captured from an individual’s social media account, open internet, or requested from the victim – into sexually-themed images that appear true-to-life in likeness to a victim, then circulate them on social media, public forums, or pornographic websites,” the FBI said in June.

“Many victims, which have included minors, are unaware their images were copied, manipulated, and circulated until it was brought to their attention by someone else.”
