
The artificial intelligence field needs an international watchdog to regulate future superintelligence, according to the founders of OpenAI.

In a blog post from CEO Sam Altman and company leaders Greg Brockman and Ilya Sutskever, the group said that, given the potential for existential risk, the world “can’t just be reactive,” comparing the technology to nuclear energy.

To that end, they suggested coordination among the leading development efforts, noting that there are “many ways this could be implemented,” including a project set up by major governments or a limit on the annual rate of growth in AI capability.

“Second, we are likely to eventually need something like an IAEA for superintelligence efforts; any effort above a certain capability (or resources like compute) threshold will need to be subject to an international authority that can inspect systems, require audits, test for compliance with safety standards, place restrictions on degrees of deployment and levels of security, etc.,” they wrote.

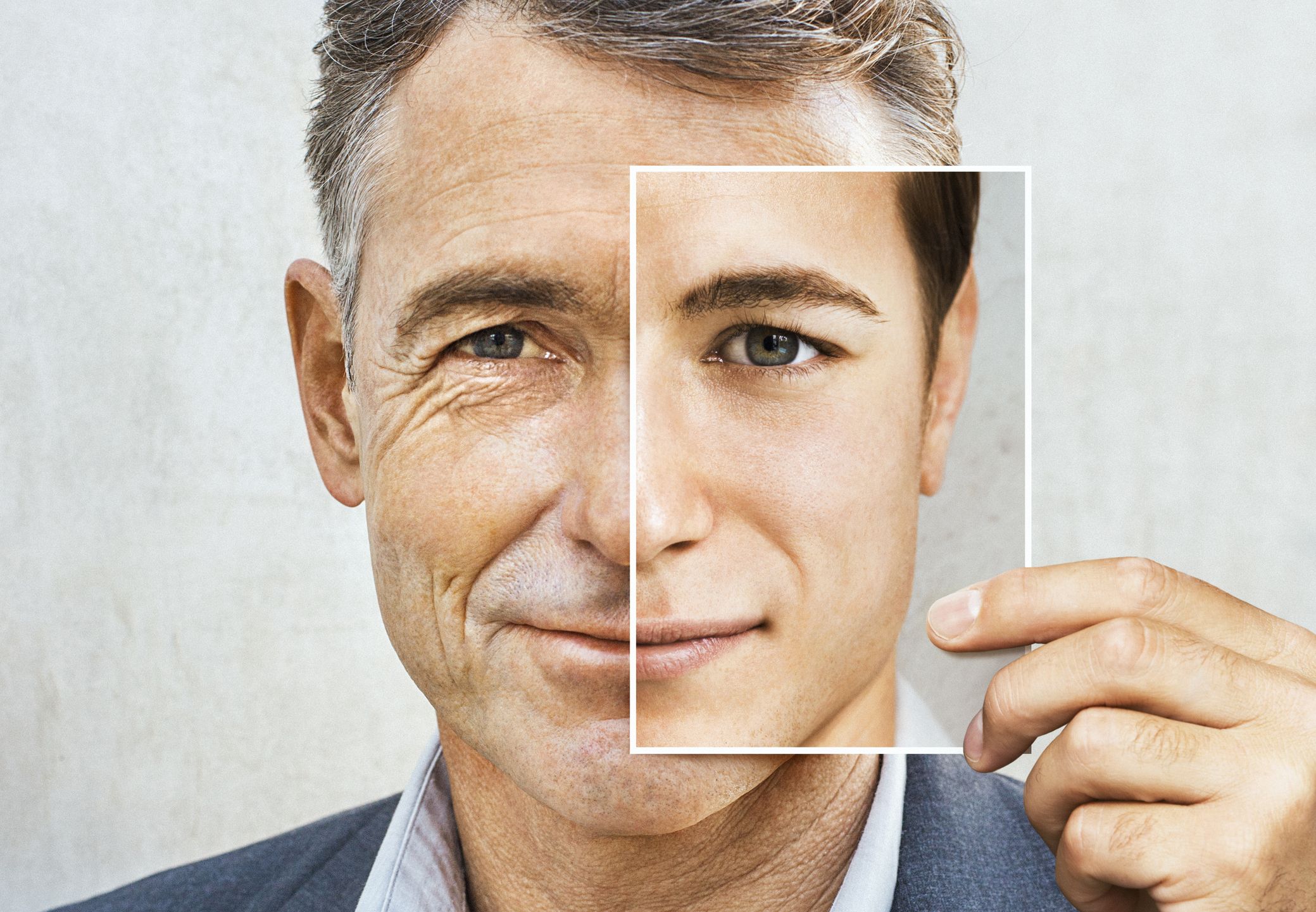

AI COULD GROW SO POWERFUL IT REPLACES EXPERIENCED PROFESSIONALS WITHIN 10 YEARS, SAM ALTMAN WARNS

Sam Altman, chief executive officer of OpenAI, during a fireside chat at University College London, United Kingdom, on Wednesday, May 24, 2023. (Chris J. Ratcliffe/Bloomberg via Getty Images)

The International Atomic Energy Agency (IAEA) is the world’s center for cooperation in the nuclear field. The U.S. is a member state.

The authors said tracking compute and energy usage could go a long way toward making such oversight workable.

“As a first step, companies could voluntarily agree to begin implementing elements of what such an agency might one day require, and as a second, individual countries could implement it. It would be important that such an agency focus on reducing existential risk and not issues that should be left to individual countries, such as defining what an AI should be allowed to say,” the blog post continued.

Third, they said, “we need the technical capability to make a superintelligence safe.”

The OpenAI logo on a smartphone in Brooklyn on Jan. 12, 2023. (Gabby Jones/Bloomberg via Getty Images)

The authors listed some things as “not in scope.” Companies should be allowed to develop models below a significant capability threshold “without the kind of regulation” they described, and the focus on the systems they are “concerned about” should not be watered down by “applying similar standards to technology far below this bar.” The governance of the most powerful systems, however, must have strong public oversight, they said.

Sam Altman speaks during a Senate Judiciary Subcommittee hearing in Washington, D.C., on Tuesday, May 16, 2023. (Eric Lee/Bloomberg via Getty Images)

“We believe people around the world should democratically decide on the bounds and defaults for AI systems. We don’t yet know how to design such a mechanism, but we plan to experiment with its development. We continue to think that, within these wide bounds, individual users should have a lot of control over how the AI they use behaves,” they said.

The trio believes it is conceivable that AI systems will exceed expert skill level in most domains within the next decade.

So why build AI technology at all, considering the risks and difficulties it poses?


They argue that AI will lead to a “much better world than what we can imagine today,” and that it would be “unintuitively risky and difficult to stop the creation of superintelligence.”

“Because the upsides are so tremendous, the cost to build it decreases each year, the number of actors building it is rapidly increasing, and it’s inherently part of the technological path we are on, stopping it would require something like a global surveillance regime, and even that isn’t guaranteed to work. So we have to get it right,” they said.
