The latest version of ChatGPT, the artificial intelligence chatbot from OpenAI, is smart enough to pass a radiology board-style exam, a new study from the University of Toronto found.

GPT-4, which launched officially on March 13, 2023, correctly answered 81% of the 150 multiple-choice questions on the exam.

Despite the chatbot’s high accuracy, the study, published in Radiology, a journal of the Radiological Society of North America (RSNA), also detected some concerning inaccuracies.

“A radiologist is doing three things when interpreting medical images: looking for findings, using advanced reasoning to understand the meaning of the findings, and then communicating those findings to patients and other physicians,” explained lead author Rajesh Bhayana, M.D., an abdominal radiologist and technology lead at University Medical Imaging Toronto, Toronto General Hospital in Toronto, Canada, in a statement to Fox News Digital.

“Most AI research in radiology has focused on computer vision, but language models like ChatGPT are essentially performing steps two and three (the advanced reasoning and language tasks),” she went on.

“Our research provides insight into ChatGPT’s performance in a radiology context, highlighting the incredible potential of large language models, along with the current limitations that make it unreliable.”

The researchers created the questions in a way that mirrored the style, content and difficulty of the Canadian Royal College and American Board of Radiology exams, according to a discussion of the study in the medical journal.

(Because ChatGPT does not yet accept images, the researchers were limited to text-based questions.)

The questions were then posed to two different versions of ChatGPT: GPT-3.5 and the newer GPT-4.

‘Marked improvement’ in advanced reasoning

The GPT-3.5 version of ChatGPT answered 69% of questions correctly (104 of 150), near the passing grade of 70% used by the Royal College in Canada, according to the study findings.

It struggled the most with questions involving “higher-order thinking,” such as describing imaging findings.

As for GPT-4, it answered 81% (121 of 150) of the same questions correctly, exceeding the passing threshold of 70%.

The newer version did much better at answering the higher-order thinking questions.

“The purpose of the study was to see how ChatGPT performed in the context of radiology, both in advanced reasoning and basic knowledge,” Bhayana said.

“GPT-4 performed very well in both areas, and demonstrated improved understanding of the context of radiology-specific language, which is critical to enable the more advanced tools that radiology physicians can use to be more efficient and effective,” she added.

The researchers were surprised by GPT-4’s “marked improvement” in advanced reasoning capabilities over GPT-3.5.

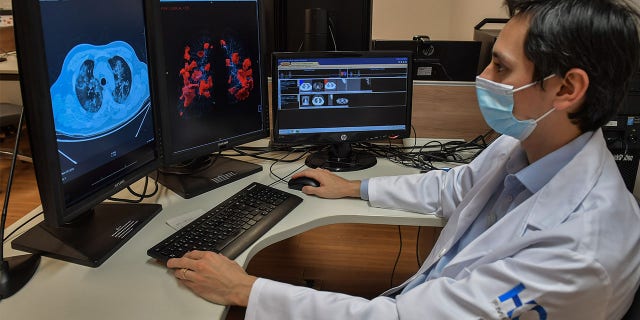

“Our findings highlight the growing potential of these models in radiology, but also in other areas of medicine,” said Bhayana.

Dr. Harvey Castro, a Dallas, Texas-based board-certified emergency medicine physician and national speaker on artificial intelligence in health care, was not involved in the study but reviewed the findings.

“The leap in performance from GPT-3.5 to GPT-4 can be attributed to a more extensive training dataset and an increased emphasis on human reinforcement learning,” he told Fox News Digital.

“This expanded training enables GPT-4 to interpret, understand and utilize embedded knowledge more effectively,” he added.

Getting a higher score on a standardized test, however, does not necessarily equate to a more profound understanding of a medical subject such as radiology, Castro pointed out.

“It shows that GPT-4 is better at pattern recognition based on the vast amount of information it has been trained on,” he said.

Future of ChatGPT in health care

Many health technology experts, including Bhayana, believe that large language models (LLMs) like GPT-4 will change the way people interact with technology in general, and more specifically in medicine.

“They’re already being incorporated into search engines like Google, electronic medical records like Epic, and medical dictation software like Nuance,” she told Fox News Digital.

“But there are many more advanced applications of these tools that will transform health care even further.”

In the future, Bhayana believes these models could answer patient questions accurately, help physicians make diagnoses and guide treatment decisions.

Homing in on radiology, she predicted that LLMs could help augment radiologists’ abilities and make them more efficient and effective.

“We are not quite there yet (the models are not yet reliable enough to use for clinical practice), but we are quickly moving in the right direction,” she added.

Limitations of ChatGPT in medicine

Perhaps the biggest limitation of LLMs in radiology is their inability to interpret visual data, which is a critical aspect of radiology, Castro said.

Large language models like ChatGPT are also known for their tendency to “hallucinate,” which is when they provide inaccurate information in a confident-sounding way, Bhayana pointed out.

“These hallucinations decreased in GPT-4 compared to 3.5, but it still occurs too frequently to be relied on in clinical practice,” she said.

“Physicians and patients should be aware of the strengths and limitations of these models, including knowing that they cannot be relied on as a sole source of information at present,” Bhayana added.

Castro agreed that while LLMs may have enough knowledge to pass tests, they can’t rival human physicians when it comes to determining patients’ diagnoses and creating treatment plans.

“Standardized exams, including those in radiology, often focus on ‘textbook’ cases,” he said.

“But in clinical practice, patients rarely present with textbook symptoms.”

Every patient has unique symptoms, histories and personal factors that may diverge from “standard” cases, said Castro.

“This complexity often requires nuanced judgment and decision-making, a capacity that AI, including advanced models like GPT-4, currently lacks.”

While the improved scores of GPT-4 are promising, Castro said, “much work must be done to ensure that AI tools are accurate, safe and valuable in a real-world clinical setting.”
