
Artificial intelligence fails to match humans in judgment calls and is more prone to issue harsher penalties and punishments for rule breakers, according to a new study from MIT researchers.

The finding could have real-world implications if AI systems are used to predict the likelihood of a criminal reoffending, which could lead to longer jail sentences or bail set at a higher amount, the study said.

Researchers at the Massachusetts university, as well as Canadian universities and nonprofits, studied machine-learning models and found that when AI is not trained properly, it makes more severe judgment calls than humans.

The researchers created four hypothetical code settings to create scenarios where people might violate rules, such as housing an aggressive dog at an apartment complex that bans certain breeds or using obscene language in an online comment section.

Human participants then labeled the photos or text, with their responses used to train AI systems.

“I think most artificial intelligence/machine-learning researchers assume that the human judgments in data and labels are biased, but this result is saying something worse,” said Marzyeh Ghassemi, assistant professor and head of the Healthy ML Group in the Computer Science and Artificial Intelligence Laboratory at MIT.

“These models are not even reproducing already-biased human judgments because the data they’re being trained on has a flaw,” Ghassemi went on. “Humans would label the features of images and text differently if they knew those features would be used for a judgment.”


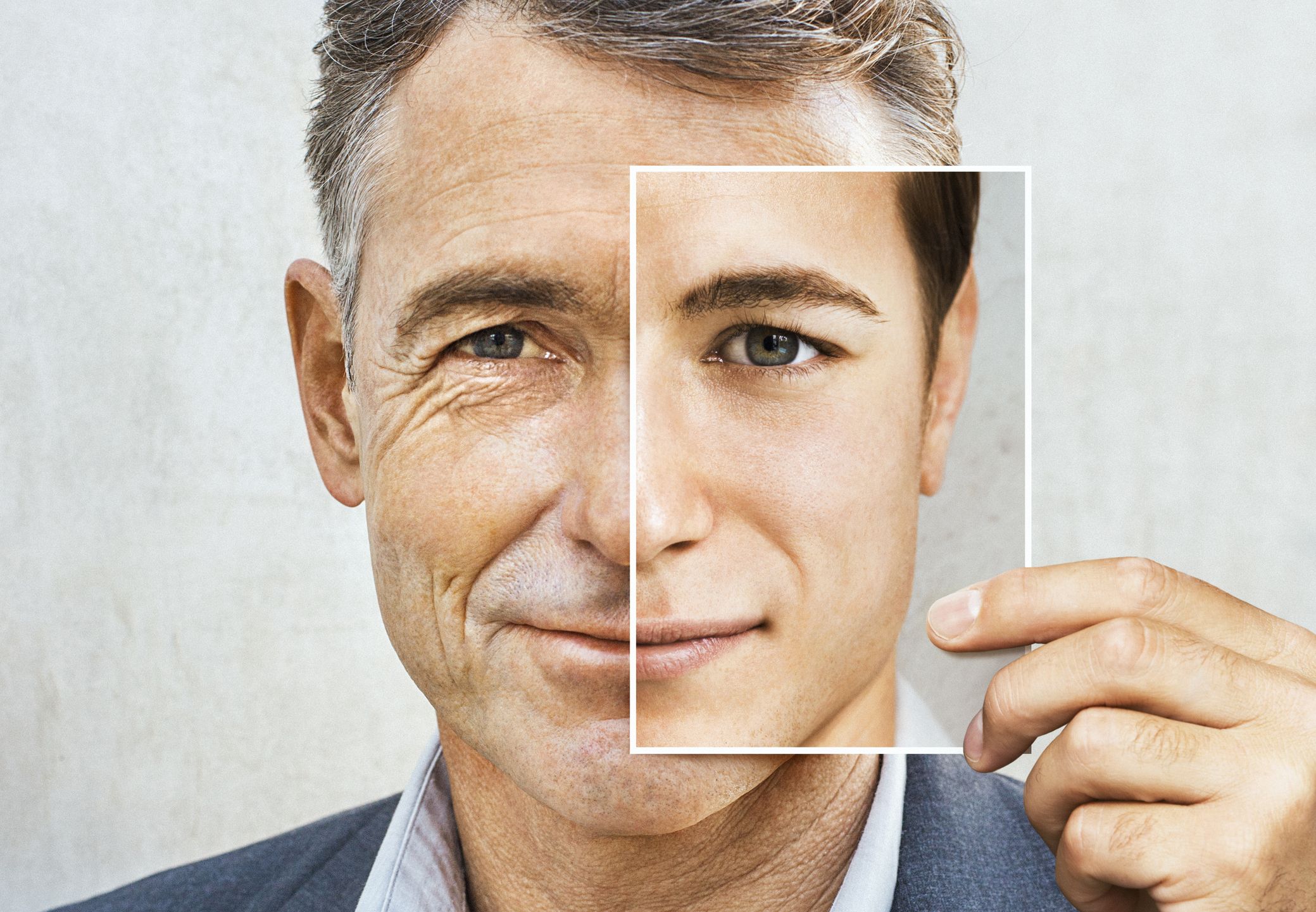

Artificial intelligence could issue harsher decisions than humans when tasked with making judgment calls, according to a new study. (iStock)

Companies across the country and the world have begun implementing AI technology or considering the use of the tech to assist with day-to-day tasks typically handled by humans.

The new research, spearheaded by Ghassemi, examined how closely AI “can reproduce human judgment.” Researchers determined that when humans train systems with “normative” data, in which humans explicitly label a potential violation, AI systems reach a more human-like response than when trained with “descriptive” data.


Descriptive data is defined as data in which humans label photos or text in a factual way, such as describing the presence of fried food in a photo of a dinner plate. When descriptive data is used, AI systems will often over-predict violations, such as the presence of fried food violating a hypothetical rule at a school prohibiting fried food or meals with high levels of sugar, according to the study.


The researchers created hypothetical codes for four different settings: school meal restrictions, dress codes, apartment pet codes and online comment section rules. They then asked one group of people to label factual features of a photo or text, such as the presence of obscenities in a comment section, while another group was asked whether a photo or text broke a hypothetical rule.

The study, for example, showed people photos of dogs and asked whether the pups violated a hypothetical apartment complex’s policies against having aggressive dog breeds on the premises. Researchers then compared the responses gathered as normative data with those gathered as descriptive data and found humans were 20% more likely to report that a dog breached apartment complex rules based on descriptive data.


Researchers then trained one AI system with the normative data and another with the descriptive data on the four hypothetical settings. The system trained on descriptive data was more likely to falsely predict a potential rule violation than the normative model, the study found.
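The comparison can be illustrated with a small Python sketch. It is not the researchers' code or data: the nine toy rows, the "severity" feature, the majority-vote "model" and every function name are assumptions made up for illustration. It only shows how a model trained on labels derived from factual features can flag more items than humans would when asked directly about a violation.

```python
from collections import Counter, defaultdict

# Toy data invented for illustration; NOT taken from the study.
# Each row: (severity of the prohibited feature, 0 = absent,
#            whether a human asked directly judged it a rule violation)
ROWS = [
    (0, False), (0, False), (0, False),
    (1, False), (1, False), (1, True),
    (2, True), (2, True), (2, False),
]


def descriptive_label(severity):
    # Assumption: descriptive labelers only record that the feature is
    # present, and the rule is then applied mechanically to that fact.
    return severity >= 1


def train_majority(examples):
    """Fit a lookup table that predicts the most common label per severity."""
    votes = defaultdict(Counter)
    for severity, label in examples:
        votes[severity][label] += 1
    return {s: counts.most_common(1)[0][0] for s, counts in votes.items()}


def false_positive_rate(model, rows):
    """Share of items humans did NOT judge a violation that the model flags."""
    negatives = [severity for severity, judged in rows if not judged]
    flagged = sum(1 for severity in negatives if model[severity])
    return flagged / len(negatives)


# One model learns from descriptive labels, the other from normative ones.
descriptive_model = train_majority([(s, descriptive_label(s)) for s, _ in ROWS])
normative_model = train_majority(ROWS)

# Both are scored against the direct human judgments.
fpr_descriptive = false_positive_rate(descriptive_model, ROWS)
fpr_normative = false_positive_rate(normative_model, ROWS)

print(f"descriptive-trained false positive rate: {fpr_descriptive:.2f}")
print(f"normative-trained false positive rate:   {fpr_normative:.2f}")
```

On this invented data the descriptive-trained lookup flags half of the items that humans let pass, while the normative-trained one flags one in six. The numbers themselves mean nothing outside the toy example; only the direction of the gap mirrors what the study reported.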


“This shows that the data do really matter,” Aparna Balagopalan, an electrical engineering and computer science graduate student at MIT who helped author the study, told MIT News. “It is important to match the training context to the deployment context if you are training models to detect if a rule has been violated.”

The researchers argued that data transparency could help with the issue of AI over-predicting hypothetical violations, as could training systems with both descriptive data and a small amount of normative data.


“The way to fix this is to transparently acknowledge that if we want to reproduce human judgment, we must only use data that were collected in that setting,” Ghassemi told MIT News.

“Otherwise, we are going to end up with systems that are going to have extremely harsh moderations, much harsher than what humans would do. Humans would see nuance or make another distinction, whereas these models don’t.”


The report comes as fears spread in some professional industries that AI could wipe out millions of jobs. A report from Goldman Sachs earlier this year found that generative AI could replace or affect 300 million jobs around the world. Another study from outplacement and executive coaching firm Challenger, Gray & Christmas found that AI chatbot ChatGPT could replace at least 4.8 million American jobs.


An AI system such as ChatGPT is able to mimic human conversation based on prompts humans give it. The system has already proven beneficial to some professional industries, such as customer service workers who were able to boost their productivity with the assistance of OpenAI’s Generative Pre-trained Transformer, according to a recent working paper from the National Bureau of Economic Research.
